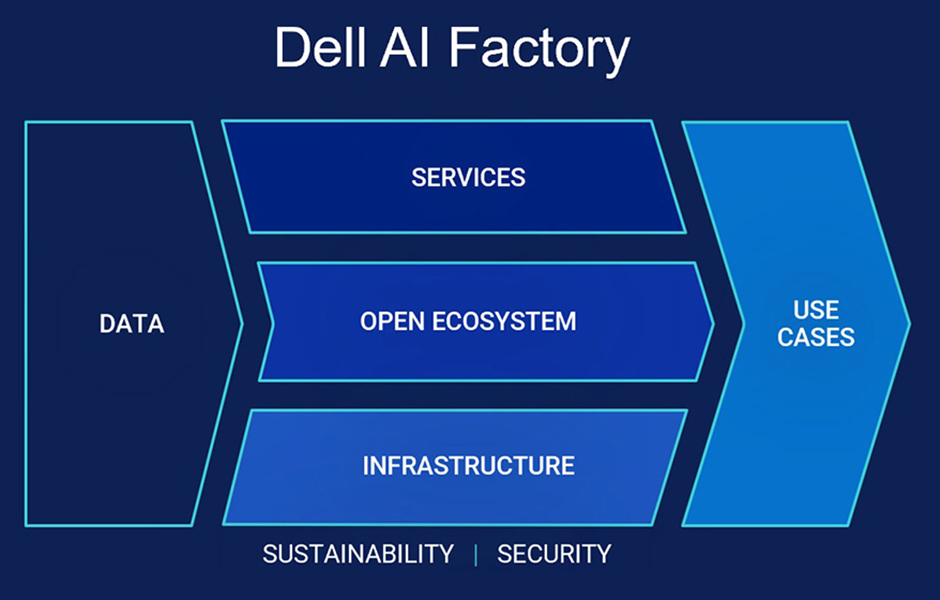

Dell Technologies improves Dell AI Factory approach, tightly integrating AI infrastructure, solutions and services

Digital Edge Bureau 20 May, 2025

Dell Technologies, the leading IT products & solutions provider to new-age enterprises, has improved and widened the scope of its Dell AI Factory approach, which brings together energy-efficient AI infrastructure, an integrated partner ecosystem and professional services to make AI deployments simpler and faster.

Dell Technologies’ intense focus on AI reflects how essential AI has become to businesses: 75 percent of them say AI is key to their strategy, and 65 percent have successfully moved AI projects into production. However, challenges such as data quality, security concerns and high costs can slow progress.

Cost-effective approach

The Dell AI Factory approach can be up to 62 percent more cost-effective for running inference on large language models (LLMs) on-premises than in the public cloud, and it helps organizations securely and easily deploy enterprise AI workloads at any scale. Dell offers the industry’s most comprehensive AI portfolio, designed for deployments across client devices, data centers, edge locations and clouds.

Dell AI Factory infrastructure

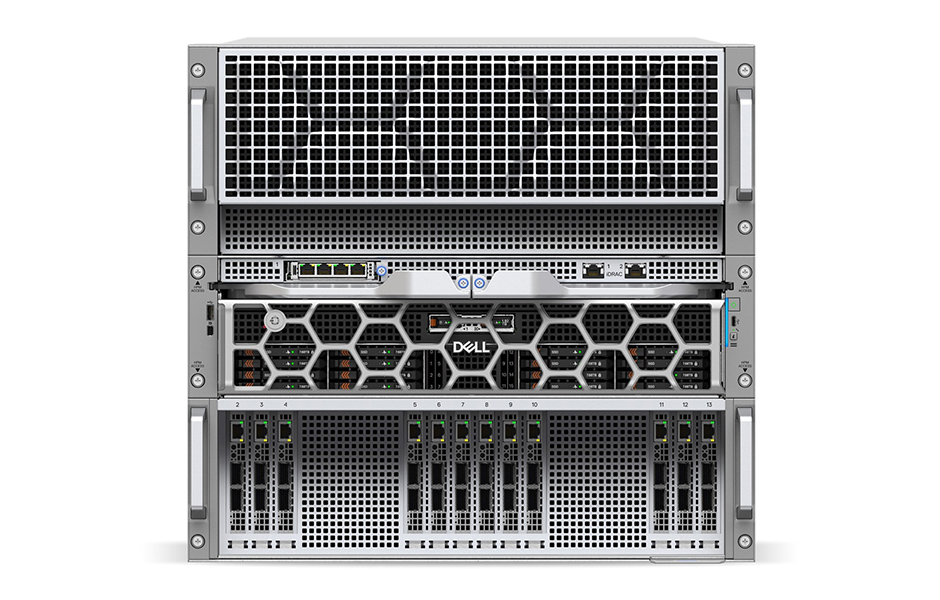

Dell is introducing end-to-end AI infrastructure that supports everything from edge inferencing on an AI PC to massive enterprise AI workloads in the data center.

For instance, the Dell Pro Max Plus laptop with the Qualcomm AI 100 PC Inference Card is the world’s first mobile workstation with an enterprise-grade discrete NPU. It offers fast, secure on-device inferencing at the edge for large AI models that typically run in the cloud, such as today’s 109-billion-parameter model.

The Qualcomm AI 100 PC Inference Card features 32 AI cores and 64 GB of memory, providing the power that AI engineers and data scientists need to deploy large models for edge inferencing.

Dell PowerCool Enclosed Rear Door Heat Exchanger (eRDHx) is a Dell-engineered alternative to standard rear door heat exchangers. Designed to capture 100 percent of the heat generated by IT equipment through its self-contained airflow system, the eRDHx can reduce cooling energy costs by up to 60 percent compared to currently available solutions.

With Dell’s factory-integrated IR7000 racks equipped with future-ready eRDHx technology, organizations can significantly cut costs and eliminate reliance on expensive chillers, because the eRDHx operates with warmer water (between 32 and 36 degrees Celsius) than traditional solutions. This lets organizations maximize data center capacity by deploying up to 16 percent more racks of dense compute without increasing power consumption.

The solution enables air cooling capacity of up to 80 kW per rack for dense AI and HPC deployments. At the same time, it reduces risk with advanced leak detection, real-time thermal monitoring and unified management of all rack-level components through the Dell Integrated Rack Controller.

Meanwhile, Dell PowerEdge XE9785 and XE9785L servers will support AMD Instinct MI350 series GPUs with 288 GB of HBM3e memory per GPU and up to 35 times greater inferencing performance. Available in liquid-cooled and air-cooled configurations, the servers will reduce facility cooling energy costs.

Dell AI Data Platform

Because AI is only as powerful as the data that fuels it, organizations need a platform designed for performance and scalability. Updates to the Dell AI Data Platform improve access to high-quality structured, semi-structured and unstructured data across the AI lifecycle.

Dell Project Lightning is, according to new testing, the world’s fastest parallel file system, delivering up to two times greater throughput than competing parallel file systems. Project Lightning will shorten training times for large-scale, complex AI workflows.

In addition, Dell Data Lakehouse enhancements simplify AI workflows and accelerate use cases such as recommendation engines, semantic search and customer intent detection by creating and querying AI-ready datasets.

“We’re excited to work with Dell to support our cutting-edge AI initiatives, and we expect Project Lightning to be a critical storage technology for our AI innovations,” said Dr. Paul Calleja, director, Cambridge Open Zettascale Lab and Research Computing Services, University of Cambridge.

Dell expands AI partner ecosystem

Dell is collaborating with AI ecosystem players to deliver tailored solutions that simply and quickly integrate into organizations’ existing IT environments. Organizations can enable intelligent, autonomous workflows with a first-of-its-kind on-premises deployment of Cohere North, which integrates various data sources while ensuring control over operations.

Organizations can also innovate where their data resides with Google Gemini and Google Distributed Cloud on-premises, available on Dell PowerEdge XE9680 and XE9780 servers. They can prototype and build agent-based enterprise AI applications with Dell AI Solutions with Llama, using Meta’s latest Llama Stack distribution and Llama 4 models.

They can securely run scalable AI agents and enterprise search on-premises with Glean. Dell and Glean’s collaboration will deliver the first on-premises deployment architecture for Glean’s Work AI platform. Finally, they can build and deploy secure, customizable AI applications and knowledge management workflows with solutions jointly engineered by Dell and Mistral AI.

The Dell AI Factory also expands to include advancements to the Dell AI Platform with AMD, which add 200G storage networking and an upgraded AMD ROCm open software stack to help organizations simplify workflows, support LLMs and efficiently manage complex workloads.

Dell and AMD are collaborating to provide Day 0 support and performance-optimized containers for AI models such as Llama 4. The new Dell AI Platform with Intel helps enterprises deploy a full stack of high-performance, scalable AI infrastructure with Intel Gaudi 3 AI accelerators.